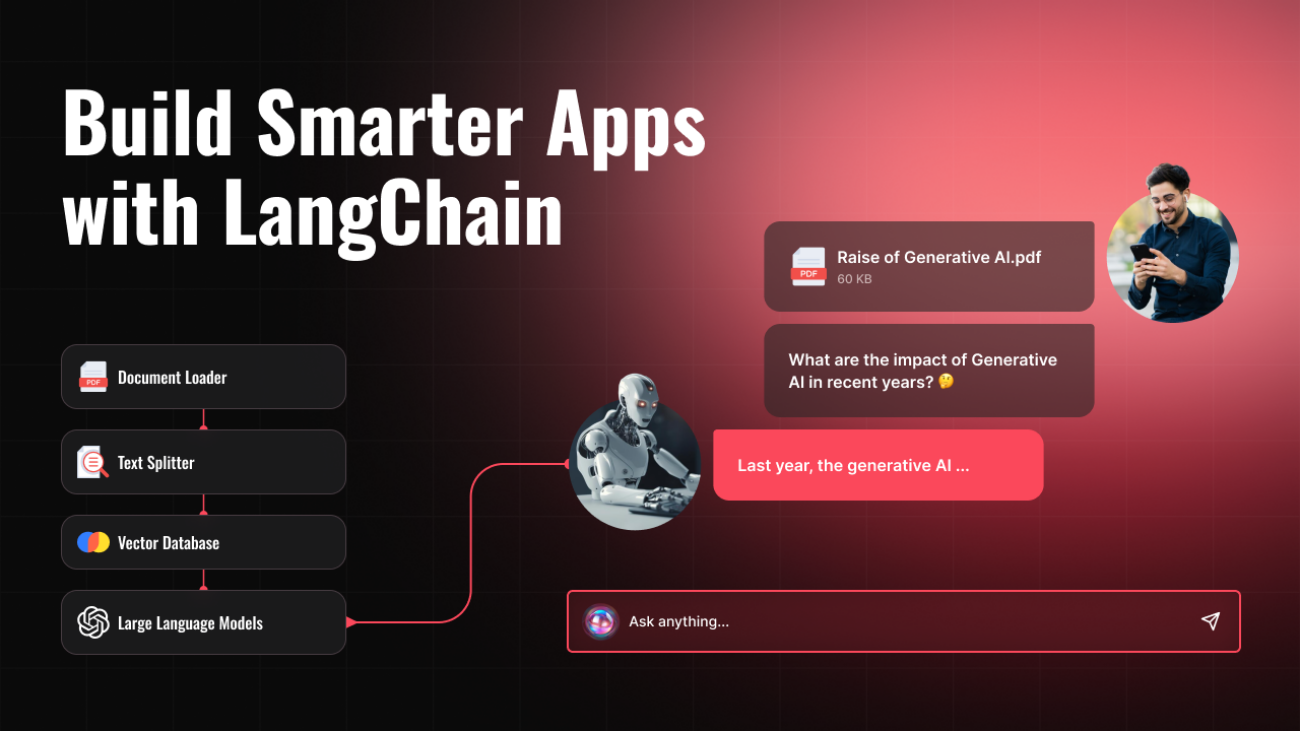

The LangChain framework provides versatile tools for building interactive applications with large language models (LLMs). With LangChain, we can integrate and swap different data stores, LLMs, and utility tools while developing AI applications. In this article, we will explore how to build an application using OpenAI and the LangChain framework, along with options for extending it further.

OpenAI without LangChain

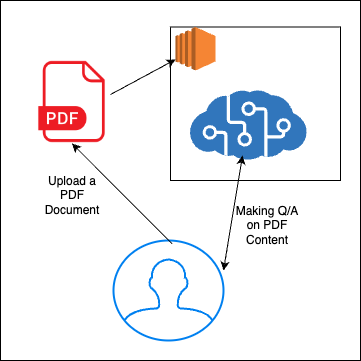

Let’s consider an app using OpenAI in which we upload a research paper in PDF format and then use a Q&A prompt to ask questions about its content.

To build this:

- We need to upload the PDF file to our system.

- Extract the text content from the file.

- Split the content into smaller chunks (sending the entire content to OpenAI would increase costs and can exceed the model’s context window).

- Index each chunk so that, when we receive a query, we can find the relevant chunks and send only those to the model.

- Make an API call to OpenAI to get a response.

- Preserve the conversation history ourselves, because the OpenAI API is stateless and does not remember previous exchanges.
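To make the manual bookkeeping concrete, here is a minimal sketch of the request body we would have to assemble by hand for OpenAI’s Chat Completions endpoint, which takes a `messages` list of `role`/`content` entries. The helper `build_chat_request` is hypothetical, the default model name `gpt-3.5-turbo` is an illustrative assumption, and no network call is made.

```python
import json


def build_chat_request(history, context_chunk, question, model="gpt-3.5-turbo"):
    """Assemble a Chat Completions request body by hand (hypothetical helper).

    history: list of (user_text, assistant_text) pairs from earlier turns.
    The API is stateless, so every earlier turn must be resent on each call.
    """
    messages = [
        {
            "role": "system",
            "content": "Answer using only this excerpt from the document:\n" + context_chunk,
        }
    ]
    # Replay the previous conversation so the model can "remember" it.
    for user_text, assistant_text in history:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    # The new question always goes last.
    messages.append({"role": "user", "content": question})
    return {"model": model, "messages": messages}


if __name__ == "__main__":
    body = build_chat_request(
        history=[("What is the paper about?", "It describes a data pipeline.")],
        context_chunk="Section 2 explains how the pipeline is evaluated.",
        question="How is it evaluated?",
    )
    print(json.dumps(body, indent=2))
```

Chunk selection, history trimming, and retries would all be our own code as well; these are the parts LangChain packages into reusable components.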

With LangChain, we can build each of these steps with minimal configuration, and for most steps the framework offers several tools and vendor options to choose from.

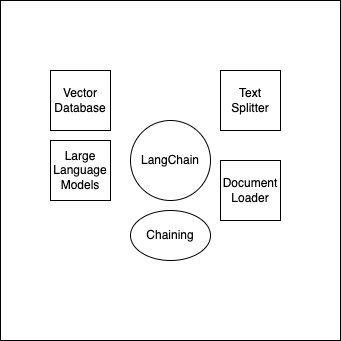

LangChain Assistance

LangChain has built-in document loaders that read and extract data from various formats, including PDF, plain text, and Markdown. It can even load every file stored under a cloud location, such as an S3 bucket.
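LangChain’s loaders return `Document` objects that carry the extracted text in `page_content` plus a `metadata` dict (for example, the source path). The following stdlib-only sketch imitates that idea for local `.txt` and `.md` files; `SimpleDocument` and `load_directory` are hypothetical stand-ins, not LangChain code. Real PDF extraction needs a parser, which is what LangChain’s `PyPDFLoader` (built on the `pypdf` package) provides.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class SimpleDocument:
    """Hypothetical stand-in for LangChain's Document: text plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def load_directory(folder, suffixes=(".txt", ".md")):
    """Read every matching file under `folder` (recursively) into SimpleDocuments."""
    docs = []
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in suffixes:
            text = path.read_text(encoding="utf-8")
            # Record the origin so answers can later say which file a chunk came from.
            docs.append(SimpleDocument(text, {"source": str(path)}))
    return docs
```

For example, `load_directory("notes")` would return one `SimpleDocument` per text or Markdown file under a hypothetical `notes` folder, skipping everything else.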

To create smaller chunks, LangChain offers text splitters. By specifying the chunk size (and, optionally, an overlap between neighboring chunks), we get smaller sections of the content.
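LangChain’s splitters are configured mainly through `chunk_size` and `chunk_overlap`. Below is a simplified, purely character-based sketch of that idea. It is not LangChain’s algorithm, which also tries to break on separators such as blank lines and newlines. The overlap means text that straddles a boundary appears in both neighboring chunks.

```python
def split_text(text, chunk_size, chunk_overlap=0):
    """Split text into fixed-size character chunks that overlap by chunk_overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # distance between the starts of two chunks
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, chunk_overlap=1))
# ['abcd', 'defg', 'ghij']
```

In practice chunk sizes are in the hundreds or thousands of characters; the tiny values here just make the overlap visible.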

To find the relevant chunks later, LangChain converts each chunk into an embedding (a numeric vector) and stores it, together with its metadata, in popular vector stores such as FAISS, Pinecone, or Chroma. To simplify integration, LangChain provides a common wrapper around these stores’ APIs.
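A toy, in-memory version shows what a vector store does at query time: embed the query, compare it with every stored vector, and return the closest chunks. As a loud assumption, word counts stand in for real embeddings (such as OpenAI’s embedding models). The method names `add_texts` and `similarity_search` mirror LangChain’s vector store interface, but this class is hypothetical and returns `(text, metadata)` tuples rather than LangChain `Document` objects.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': lowercase word counts (real apps call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Hypothetical minimal store: keeps (vector, text, metadata) rows."""

    def __init__(self):
        self._rows = []

    def add_texts(self, texts, metadatas=None):
        metadatas = metadatas or [{} for _ in texts]
        for text, meta in zip(texts, metadatas):
            self._rows.append((embed(text), text, meta))

    def similarity_search(self, query, k=2):
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [(text, meta) for _, text, meta in ranked[:k]]


store = InMemoryVectorStore()
store.add_texts(
    ["cats are small pets", "the stock market fell", "dogs and cats are pets"],
    [{"chunk": 0}, {"chunk": 1}, {"chunk": 2}],
)
print(store.similarity_search("cats pets", k=2))
# [('cats are small pets', {'chunk': 0}), ('dogs and cats are pets', {'chunk': 2})]
```

Real vector stores use approximate nearest-neighbor indexes so that search stays fast with millions of chunks, instead of this linear scan.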

After receiving the user’s query, we need to send it to OpenAI along with the relevant content chunks. LangChain includes built-in methods for this, and we can even swap OpenAI for other popular LLMs, including models hosted on an on-premises server.
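The simplest way to send the query with its chunks is to paste the retrieved text directly into the prompt, which LangChain’s question-answering chains call the "stuff" strategy (alternatives include "map_reduce" and "refine"). This sketch assumes the chunks arrive already sorted by relevance and uses a character budget as a rough stand-in for the model’s token limit; `build_stuffed_prompt` and `max_chars` are hypothetical.

```python
def build_stuffed_prompt(chunks, question, max_chars=2000):
    """Paste as many retrieved chunks as fit under max_chars, then the question."""
    header = "Use the following context to answer the question.\n\n"
    footer = f"\nQuestion: {question}\nAnswer:"
    budget = max_chars - len(header) - len(footer)
    selected = []
    used = 0
    for chunk in chunks:  # assumed to be ordered from most to least relevant
        piece = chunk + "\n"
        if used + len(piece) > budget:
            break  # stop before the prompt exceeds the budget
        selected.append(piece)
        used += len(piece)
    return header + "".join(selected) + footer


print(build_stuffed_prompt(["Chunk about methods.", "Chunk about results."], "What was measured?"))
```

Dropping the least relevant chunks first keeps the prompt within budget while preserving the most useful context.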

Because the OpenAI API does not retain conversation history, LangChain’s memory components generate and save the context of previous interactions, allowing continuity across subsequent conversations and queries.
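LangChain offers several memory classes, for example `ConversationBufferMemory` (the full transcript), `ConversationBufferWindowMemory` (only the last k exchanges), and `ConversationSummaryMemory` (an LLM-written summary). Below is a hypothetical, simplified sketch of the window idea, showing how bounding the history keeps prompts from growing without limit. It is not LangChain’s implementation.

```python
from collections import deque


class WindowMemory:
    """Keep only the last k exchanges (hypothetical, simplified memory)."""

    def __init__(self, k=3):
        self.turns = deque(maxlen=k)  # the oldest turn is dropped automatically

    def save(self, user_text, ai_text):
        self.turns.append((user_text, ai_text))

    def as_messages(self):
        """Render the remembered turns in chat-message form for the next request."""
        messages = []
        for user_text, ai_text in self.turns:
            messages.append({"role": "user", "content": user_text})
            messages.append({"role": "assistant", "content": ai_text})
        return messages


memory = WindowMemory(k=2)
memory.save("Hi", "Hello!")
memory.save("What is LangChain?", "A framework for LLM apps.")
memory.save("Who uses it?", "Developers building AI apps.")
print([m["content"] for m in memory.as_messages()])
```

The trade-off is that anything older than k turns is forgotten; summary-based memory keeps a compressed version of it instead, at the cost of extra LLM calls.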

Hello World

We will start by building a Hello World application using Python and LangChain. The requirements are:

- Python 3.11 (the version used for this article)

- A paid plan on the OpenAI platform, with an API key

We are going to use the following packages and versions (Pipfile syntax):

```toml
langchain = "==0.0.352"
openai = "==0.27.8"
```

With an OpenAI secret key and these package versions installed, our simple code is as follows:

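```python
from langchain.llms import OpenAI

# API key retrieved from the OpenAI Platform.
# Hardcoding is fine for a quick test, but never commit a real key to source control.
api_key = "my_secret_api_key"

# Completion-style LLM wrapper around the OpenAI API
llm = OpenAI(openai_api_key=api_key)

# Calling the LLM with a string sends one prompt and returns the generated text
result = llm("Write a very very short poem")
print(result)
```

It will generate a poem similar to the following: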

```text
Rain falls, sky cries
Nature’s tears, love never dies
```

Chat App with Memory

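Next, we will build a console application where the user can chat with the model, similar to ChatGPT. We capture the user’s input and send it to OpenAI to get a response. After each response, we update the conversation context, and for subsequent queries we attach this context to the request so that the answers stay relevant. Here the context is handled by ConversationSummaryMemory, which asks the LLM to maintain a running summary of the conversation instead of resending the full transcript. This example also needs the python-dotenv package, which provides the dotenv module.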

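```python
from dotenv import load_dotenv
from langchain.chains import LLMChain
from langchain.chat_models import ChatOpenAI
from langchain.memory import ConversationSummaryMemory
from langchain.prompts import (
    ChatPromptTemplate,
    HumanMessagePromptTemplate,
    MessagesPlaceholder,
)

# Load variables from a .env file (including OPENAI_API_KEY) into the environment
load_dotenv()

# Chat model wrapper; verbose=True logs what is sent to the model
chat = ChatOpenAI(verbose=True)

# Memory that keeps an LLM-written running summary of the conversation
# and exposes it under the "messages" key as chat messages
memory = ConversationSummaryMemory(
    memory_key="messages",
    return_messages=True,
    llm=chat,
)

# Prompt = conversation summary from memory + the user's new input
prompt = ChatPromptTemplate(
    input_variables=["content", "messages"],
    messages=[
        MessagesPlaceholder(variable_name="messages"),
        HumanMessagePromptTemplate.from_template("{content}"),
    ],
)

# The chain fills in the prompt, calls the model, and updates the memory
chain = LLMChain(
    llm=chat,
    prompt=prompt,
    memory=memory,
    verbose=True,
)

# Simple console loop: type a question, get an answer (Ctrl+C to quit)
while True:
    content = input(">> ")
    result = chain({"content": content})
    print(result["text"])
```

The output will be: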

When load_dotenv() is used, it loads the variables from a .env file into the environment, and LangChain automatically reads the OpenAI key from the environment variable named OPENAI_API_KEY.
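A .env file is simply a list of KEY=value lines, such as OPENAI_API_KEY=your-key, and it should be kept out of version control. The sketch below shows, in simplified form, what loading one amounts to: parse the lines, then copy them into the environment without overwriting variables that are already set (matching load_dotenv()’s default of override=False). It is not python-dotenv’s implementation, which also handles `export` prefixes, escapes, and variable expansion; `parse_dotenv` and `apply_env` are hypothetical names.

```python
import os


def parse_dotenv(text):
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Crude unquoting; real parsers handle quotes and escapes properly.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def apply_env(values, environ=None):
    """Copy values into environ, keeping any variable that is already set."""
    environ = os.environ if environ is None else environ
    for key, value in values.items():
        environ.setdefault(key, value)
    return environ


settings = parse_dotenv('# secrets\nOPENAI_API_KEY="sk-example"\n')
apply_env(settings, environ={})  # pass a plain dict to avoid touching os.environ
```

Because existing variables win, a key exported in the shell or set by a deployment platform takes precedence over the local .env file.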

Conclusion

LangChain opens new possibilities for building AI applications with large language models. Because every component (loaders, splitters, vector stores, models, and memory) can be swapped, it helps keep applications adaptable as models and vendors change, which matters from both a development and a business standpoint.

Experience the iXora Solution difference as your trusted offshore software development partner. We’re here to empower your vision with dedicated, extended, and remote software development teams. Our agile processes and tailored software development services optimize your projects, ensuring efficiency and success. At iXora Solution, we thrive in a dynamic team culture and bring innovation to custom software development.

Have specific project requirements, or need a personalized software solution? You can contact iXora Solution’s expert teams for consultation or coordination from here. We are committed to maximizing your business growth with our expertise as a custom software development and offshore solutions provider. Let’s make your goals a reality.

Thanks for your patience!
