As developers, when we try to run an existing codebase, we often have to troubleshoot environment issues. These could be dependency issues, module installation problems, or environment mismatches.

At its core, Docker tries to fix these problems. It aims to make it easy and straightforward for anyone to run any codebase or software on any PC, desktop, or even server.

In a nutshell, Docker makes it really easy to install and run software without worrying about setup and dependencies.

According to Wikipedia,

Docker is a set of platform as a service (PaaS) products that use OS-level virtualization to deliver software in packages called containers.

Just as we manage our applications, Docker lets us manage the application's infrastructure as well.

In this article, we will get an overview of how Docker isolates and governs resource usage on our development machine.

How Does an OS Run on the Machine?

Let’s get familiar with a couple of OS components:

Kernel: Most operating systems have a kernel. It is the software that governs access between all the programs running on the computer and all the physical hardware connected to the computer.

For example, if we write a file to a physical hard disk using Node.js, it is not Node.js that speaks directly to the physical device. Instead, Node.js makes a system call to the kernel with the necessary information, and the kernel persists the file to physical storage.

So the kernel is an intermediary layer that governs access between programs and physical devices.

System Call: The way a program passes instructions to the kernel is called a system call. System calls are very much like function invocations: the kernel exposes different endpoints as system calls, and programs use these system calls to perform operations.

For example, the kernel exposes system calls for writing a file. A program like Node.js invokes them with the necessary information as parameters, such as the file name and the file content.
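To see system calls without Node.js, here is a small Python sketch (Python is our choice for illustration; the idea is the same in any language). On Linux and macOS, the low-level `os.open`, `os.write`, and `os.close` functions are thin wrappers around the kernel's `open`, `write`, and `close` system calls. The helper name `write_with_syscalls` and the file name are hypothetical.

```python
import os
import tempfile


def write_with_syscalls(path: str, content: bytes) -> int:
    """Write `content` to `path` through thin wrappers over system calls.

    os.open  -> open(2):  ask the kernel for a file descriptor
    os.write -> write(2): hand the bytes to the kernel
    os.close -> close(2): release the descriptor
    Returns the number of bytes the kernel reports as written.
    """
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        written = 0
        # write(2) may accept fewer bytes than requested, so loop until done.
        while written < len(content):
            written += os.write(fd, content[written:])
        return written
    finally:
        os.close(fd)


if __name__ == "__main__":
    # Hypothetical demo file in the system's temporary directory.
    target = os.path.join(tempfile.gettempdir(), "syscall-demo.txt")
    n = write_with_syscalls(target, b"hello from a system call\n")
    print(f"kernel wrote {n} bytes to {target}")
```

On Linux, running the script under `strace -e trace=write` should reveal the underlying `write` call that the kernel receives.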

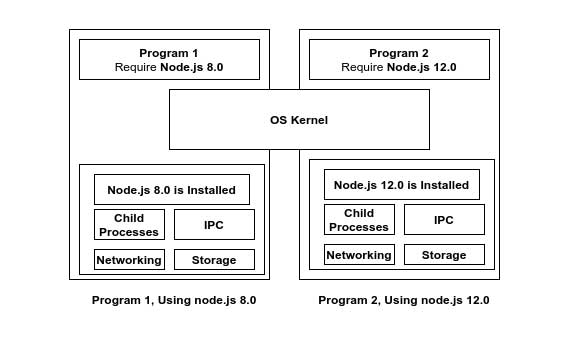

A Chaos Scenario: Consider a situation where two programs require two different versions of the Node.js runtime.

- Program 01 (requires Node.js 8.0)

- Program 02 (requires Node.js 12.0)

Since we cannot install both versions as the active runtime at the same time, only one program can work at a time.

A Solution With Namespacing: Using namespaces, we can logically separate the hard disk into multiple segments.

For example, to use two versions of Node.js at runtime, one segment will contain Node.js 8.0 and another segment will contain Node.js 12.0.

In this way, we can ensure that Program 01 uses the segment with Node.js 8.0 and Program 02 uses the segment with Node.js 12.0.

Here, the kernel determines which segment to use when a program makes a system call. For Program 01, the kernel routes the system call to the segment with Node.js 8.0, and for Program 02, it routes the system call to the segment with Node.js 12.0.

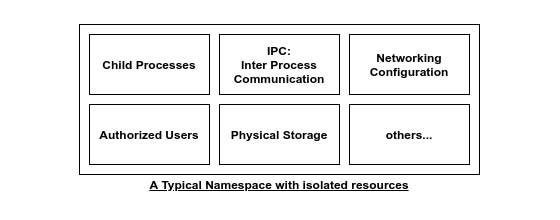

Selecting a segment for a system call based on which program made it is called namespacing. This allows isolating resources per process or per group of processes.
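The segment idea can be made concrete with a toy simulation in Python. This is not how the kernel is implemented: the `Kernel` class, the segment names, and the paths are all hypothetical. Each process is assigned to a namespace, and the same path requested by two different processes resolves to two different segments.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Kernel:
    """Toy model: each namespace maps the paths a program asks for to its own segment."""
    namespaces: Dict[str, Dict[str, str]] = field(default_factory=dict)  # ns -> {path: segment}
    process_ns: Dict[str, str] = field(default_factory=dict)             # process -> ns

    def add_namespace(self, ns: str, mounts: Dict[str, str]) -> None:
        self.namespaces[ns] = mounts

    def assign(self, process: str, ns: str) -> None:
        self.process_ns[process] = ns

    def resolve(self, process: str, path: str) -> str:
        """Simulate a system call: route `path` to the caller's own segment."""
        ns = self.process_ns[process]
        return self.namespaces[ns][path]


kernel = Kernel()
kernel.add_namespace("segment-a", {"/usr/bin/node": "/segments/a/node-8.0"})
kernel.add_namespace("segment-b", {"/usr/bin/node": "/segments/b/node-12.0"})
kernel.assign("program-01", "segment-a")
kernel.assign("program-02", "segment-b")

print(kernel.resolve("program-01", "/usr/bin/node"))  # /segments/a/node-8.0
print(kernel.resolve("program-02", "/usr/bin/node"))  # /segments/b/node-12.0
```

Both programs ask for the same path, yet neither can see the other's Node.js installation, which is the isolation namespacing provides.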

Namespacing can be applied to both kinds of elements:

- Hardware elements

  - Physical storage devices

  - Network devices

- Software elements

  - Interprocess communication

  - Observing and using other processes
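On a real Linux system, the namespaces a process belongs to are listed under `/proc/<pid>/ns`. Entries such as `mnt` (mounts and storage), `net` (network devices), `ipc` (interprocess communication), and `pid` (which processes are visible) correspond roughly to the list above. Each entry is a link of the form `mnt:[<inode>]`, and processes showing the same inode number share that namespace. The sketch below assumes a Linux host and returns an empty dictionary elsewhere; the exact set of entries depends on the kernel version, and `list_namespaces` is our own helper name.

```python
import os
from typing import Dict


def list_namespaces(pid: str = "self") -> Dict[str, str]:
    """Return {namespace type: link target} for a process on Linux.

    Each link target looks like "net:[<inode>]". Processes that show the
    same inode number share that namespace. Returns {} if /proc is absent.
    """
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Some entries may be unreadable, e.g. for another user's process.
            continue
    return namespaces


if __name__ == "__main__":
    for kind, target in list_namespaces().items():
        print(f"{kind:20} {target}")
```

Running the same script inside a Docker container should show different inode numbers for namespaces such as `mnt`, `pid`, and `net`, because Docker gives each container its own namespaces of those kinds by default.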

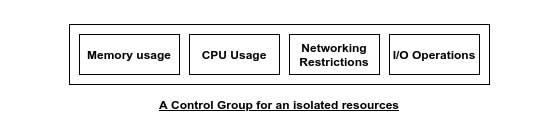

Control Group: Also known as a cgroup, it limits the amount of resources that a particular process can use.

For example, it determines:

- The amount of memory a process can use

- The amount of I/O a process can perform

- How much CPU a process can use

- How much network bandwidth a process can use
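On a Linux host using cgroup v2, these limits are plain files under `/sys/fs/cgroup`. For example, running a container with `docker run --memory=256m --cpus=0.5 ...` should result in the container's `memory.max` containing `268435456` (256 MiB in bytes) and its `cpu.max` containing `50000 100000` (quota and period in microseconds). The Python sketch below reads those two files for the current process. It assumes cgroup v2 mounted at `/sys/fs/cgroup` and returns an empty dictionary on other setups (cgroup v1, macOS, Windows); the function names are our own.

```python
from pathlib import Path
from typing import Optional

CGROUP_ROOT = Path("/sys/fs/cgroup")  # usual cgroup v2 mount point (assumption)


def cgroup_v2_path(proc_cgroup_text: str) -> str:
    """Extract the path from the cgroup v2 line ("0::/some/path") of /proc/self/cgroup."""
    for line in proc_cgroup_text.splitlines():
        hierarchy_id, controllers, path = line.split(":", 2)
        if hierarchy_id == "0" and controllers == "":
            return path
    raise ValueError("no cgroup v2 entry found")


def parse_memory_max(text: str) -> Optional[int]:
    """memory.max holds a byte count, or "max" when there is no limit."""
    value = text.strip()
    return None if value == "max" else int(value)


def parse_cpu_max(text: str) -> Optional[float]:
    """cpu.max holds "<quota> <period>"; a quota of "max" means no limit."""
    quota, period = text.split()
    return None if quota == "max" else int(quota) / int(period)


def read_own_limits() -> dict:
    """Read memory and CPU limits of the current process's cgroup, if any are set."""
    try:
        path = cgroup_v2_path(Path("/proc/self/cgroup").read_text())
        group_dir = CGROUP_ROOT / path.lstrip("/")
        limits = {}
        memory_file = group_dir / "memory.max"
        if memory_file.exists():
            limits["memory_bytes"] = parse_memory_max(memory_file.read_text())
        cpu_file = group_dir / "cpu.max"
        if cpu_file.exists():
            limits["cpus"] = parse_cpu_max(cpu_file.read_text())
        return limits
    except (OSError, ValueError):
        # Not Linux, not cgroup v2, or an unexpected file format.
        return {}


if __name__ == "__main__":
    print(read_own_limits())
```

A value of `None` means the limit is set to `max`, i.e. the cgroup imposes no cap on that resource.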

Namespacing vs. cgroup: Namespacing restricts which resources a process can access, while a cgroup restricts how much of a resource a process can use.

Utilizing Namespacing in the Docker Container

A Docker container is a combination of:

- A process or group of processes

- The kernel (shared with the host machine)

- Isolated resources

In a container, the kernel observes each system call made by a process and directs it to the specified isolated resources.

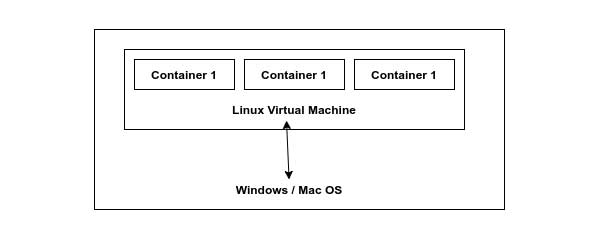

Windows and macOS do not have the Linux kernel's namespacing and cgroup features. When we install Docker on these machines, a Linux virtual machine is installed to handle the isolation of resources for the processes.

Relationship Between Image and Container: An image has the following components:

- File System snapshot

- Startup commands

When we take an image and turn it into a container, the kernel isolates the specified resources just for that container. Then the file system snapshot is placed into the container's isolated portion of physical storage.

When we run the startup commands, the processes are loaded from that physical storage and start making system calls through the kernel.
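The image/container relationship can be sketched as a toy model in Python. This is a simplification, not Docker's real storage mechanism: the `Image` and `Container` classes are hypothetical, a dictionary stands in for the file system snapshot, and the startup command is a plain Python function rather than a real process.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

FileSystem = Dict[str, str]  # toy file system: path -> file content


@dataclass
class Image:
    """Hypothetical toy image: a file system snapshot plus a startup command."""
    snapshot: FileSystem
    startup_command: Callable[[FileSystem], str]


@dataclass
class Container:
    """Hypothetical toy container created from an image."""
    image: Image
    fs: FileSystem = field(init=False)

    def __post_init__(self) -> None:
        # Copy the snapshot into storage that belongs only to this container.
        self.fs = dict(self.image.snapshot)

    def start(self) -> str:
        # The startup command sees only this container's copy of the files.
        return self.image.startup_command(self.fs)


def read_greeting(fs: FileSystem) -> str:
    return fs["/app/greeting.txt"]


image = Image(snapshot={"/app/greeting.txt": "Hello from Docker!"},
              startup_command=read_greeting)
first, second = Container(image), Container(image)
first.fs["/app/greeting.txt"] = "changed inside the first container"

print(first.start())   # changed inside the first container
print(second.start())  # Hello from Docker!
```

Because each container gets its own copy of the snapshot, a change made inside one container does not leak into the image or into other containers. Real Docker avoids full copies by giving each container a copy-on-write layer on top of the image.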

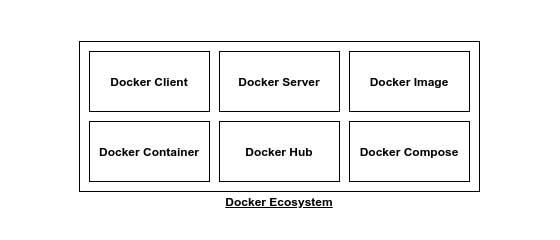

Docker Ecosystem

Docker’s ecosystem contains:

- Docker Client

- Docker Server

- Docker Machine

- Docker Image

- Docker Hub

- Docker Compose

Docker Image: A single file with all the dependencies and configuration required to run a program.

Docker Container: An instance of a Docker image.

Docker Hub: A repository of free, public Docker images that can be downloaded to the local machine and used.

Docker Client:

- Takes the command from the user

- Does the pre-processing

- Passes it to the Docker Server

Docker Server: Does the heavy processing.
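In practice, the Docker client talks to the Docker server (the daemon, `dockerd`) through a REST API, which on Linux listens on the Unix socket `/var/run/docker.sock` by default. The Python sketch below sends the API's `GET /_ping` request over that socket using only the standard library. It assumes a Linux host with a running daemon and permission to access the socket; the `UnixHTTPConnection` and `ping_daemon` helpers are our own, not part of Docker or of Python's standard library.

```python
import http.client
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that travels over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0) -> None:
        # "localhost" only fills in the HTTP Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def ping_daemon(socket_path: str = DOCKER_SOCKET) -> tuple:
    """Send GET /_ping to the daemon and return (HTTP status, response body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/_ping")
        response = conn.getresponse()
        return response.status, response.read().decode()
    finally:
        conn.close()


if __name__ == "__main__":
    try:
        print(ping_daemon())
    except OSError as exc:
        print(f"could not reach the Docker daemon: {exc}")
```

With a running daemon, the response body should be `OK`. Every `docker` command you type goes through this same client-to-server channel; the CLI just builds richer requests.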

A Hands-On Docker Example:

I don’t want you to be disappointed, so let’s try it. Assuming you have already installed Docker on your system, run:

docker run hello-world

- It simply runs a `container` from the `hello-world` image.

- This `hello-world` image is a tiny little program whose sole purpose is to print `Hello from Docker!`.

- The `Docker Server` checks the local image cache. If the image does not exist in the local image cache, it goes to `Docker Hub` and downloads the image.

- Finally, the `Docker Server` runs the image as a container, or image instance.

- If we run the same command again and the image is already in the cache, it does not download it from Docker Hub.
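The cache-then-download behavior described above can be sketched as a toy model in Python. It is not the Docker daemon's real code: `ToyDaemon` is hypothetical, and plain dictionaries stand in for the local image cache and for Docker Hub.

```python
from typing import Dict, List


class ToyDaemon:
    """Toy model of how the Docker Server finds an image for `docker run`."""

    def __init__(self, hub: Dict[str, str]) -> None:
        self.hub = hub                   # stand-in for Docker Hub: name -> image
        self.cache: Dict[str, str] = {}  # stand-in for the local image cache
        self.downloads: List[str] = []   # which images were pulled from the hub

    def get_image(self, name: str) -> str:
        if name not in self.cache:
            if name not in self.hub:
                raise LookupError(f"image {name!r} not found locally or on the hub")
            self.cache[name] = self.hub[name]  # download once, keep it locally
            self.downloads.append(name)
        return self.cache[name]

    def run(self, name: str) -> str:
        image = self.get_image(name)
        return f"running a container from {name}: {image}"


daemon = ToyDaemon(hub={"hello-world": "prints 'Hello from Docker!'"})
daemon.run("hello-world")
daemon.run("hello-world")
print(daemon.downloads)  # ['hello-world'] (pulled only on the first run)
```

The second `run` call finds the image in the cache, so the hub is contacted only once, matching the last bullet above.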

Docker Lifecycle

When we run a Docker container using the `run` command:

docker run image_name

The `docker run` command is equivalent to the following two commands:

docker create

docker start

With `docker create`, the file system snapshot of the image is copied to isolated physical storage.

Then, with `docker start`, we start the container.

Example:

Let’s verify this claim hands-on with the `hello-world` image.

docker create hello-world

This returns the ID of the created container.

Using that ID, we can now start the container:

docker start -a id

This gives us the output `Hello from Docker!`.

Here, the `-a` flag attaches to the container's output and prints it to the console.
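The same create-then-start sequence can be scripted. The sketch below drives the real `docker create` and `docker start -a` commands from Python's `subprocess` module; it assumes the `docker` CLI is on the `PATH` and the daemon is running. The `run_docker` and `create_and_start` helpers and the `runner` parameter are our own additions, the latter so the logic can be exercised without Docker installed.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], str]


def run_docker(args: List[str]) -> str:
    """Run a docker CLI command and return its standard output."""
    result = subprocess.run(["docker", *args], capture_output=True, text=True, check=True)
    return result.stdout


def create_and_start(image: str, runner: Runner = run_docker) -> str:
    """Reproduce `docker run IMAGE` as two explicit steps."""
    # Step 1: `docker create` prints the ID of the new container.
    container_id = runner(["create", image]).strip()
    # Step 2: `docker start -a` starts it and attaches to its output.
    return runner(["start", "-a", container_id])
```

With Docker available, `create_and_start("hello-world")` should return output containing `Hello from Docker!`, just like running the two commands by hand.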

I hope this gives you an idea of how Docker works internally. Feel free to leave any comments for clarification, changes, or improvements. You can also contact the iXora Solution expert teams for any consultation or coordination.
